Last Updated: 31 December 2016

Created: 31 December 2016

While there is plenty of excellent material about Docker out there, the bi-weekly lightning talks at my workplace are a good opportunity to put some basic information about this awesome technology in front of engineers on our team who would otherwise not easily be exposed to it (for instance, because they mostly work on unrelated parts of our stack, like the user interface). This lightning talk was the first in what may become a long series about many aspects of our cloud deployments. I used the five-minute lightning talk format to introduce Docker containers.

So… no one here has ever… had problems installing or running an application because of the environment. True?

My audience bursts out laughing. Of course they have had these problems. In fact, we all do, with all sorts of applications, all the time. It's a problem that Docker sets out to address, and one that it is really, really good at. My disclaimer: I am not a Docker or Linux expert by any means. But I'm proficient in Bash and, I'd say, I've grown into a Docker power user.

So, what is Docker?

The great Wikipedia gives the following definition of Docker, so let's dissect it:

Docker is an open-source project

that automates the deployment of applications

inside software containers

by providing an additional layer of abstraction and automation of

operating-system-level virtualization on Linux.

So, what's not to like about open source and Linux? Next, to understand how Docker automates the deployment of software containers, we really need to understand operating-system-level virtualization.

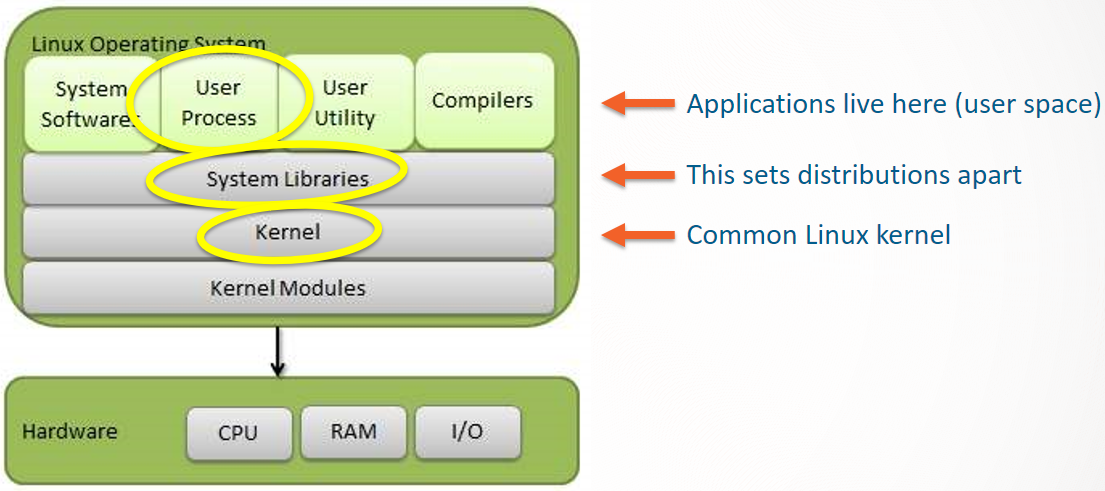

Linux Architecture

Let's take a detour into how Linux is built. The heart of Linux is the kernel. It connects the software system to the underlying hardware, and it provides all the basic controls needed for an operating system. The Linux kernel is a separate project, and it is shared between Linux distributions (Debian, Ubuntu, Red Hat, CentOS, and many more).

Working on top of the kernel are the system libraries. There are many well-known, common system libraries, but every Linux distribution assembles its own collection of them. Together with system software, utilities, and compilers, that collection is mostly what sets distributions apart. (Kernels also differ somewhat between distributions, from backports to features. This may come back to bite you when using Docker. But in principle, Docker relies on a highly standardized kernel across host environments.)

Applications use the system libraries (and the kernel) to do their work. You are the user, and the applications you run are referred to as user processes. User processes run in "user space", which is essentially a way of describing how system memory is divided between the kernel and everything else. In any case: you want to run applications? Then you need yourself some user space.
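The split described above can be observed from a running program: a shared kernel underneath, and distribution-specific system libraries and files in user space. Below is a minimal Python sketch that reports both. The kernel release comes from `platform.release()`, which on Linux is the same value `uname -r` prints. The distribution name is read from the `PRETTY_NAME` field of `/etc/os-release`, a file that most current distributions ship. The sketch assumes a Linux system, and older distributions may lack that file.

```python
import platform
from pathlib import Path


def parse_os_release(text: str) -> dict[str, str]:
    """Parse the KEY=value lines of an os-release file into a dict."""
    info: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not KEY=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Values may be wrapped in double or single quotes.
        info[key] = value.strip().strip('"').strip("'")
    return info


def describe_system(os_release_path: str = "/etc/os-release") -> str:
    """Combine the kernel release (kernel) with the distribution name (user space)."""
    kernel = platform.release()  # reported by the running kernel
    path = Path(os_release_path)  # assumption: a Linux system with this file
    if path.exists():
        distro = parse_os_release(path.read_text()).get("PRETTY_NAME", "unknown")
    else:
        distro = "unknown (no os-release file)"
    return f"kernel {kernel} / distribution {distro}"


if __name__ == "__main__":
    print(describe_system())
```

Run it first on the host and then inside a container, for example a Debian container on an Ubuntu host. The kernel part should stay the same, while the distribution part changes.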

Containers in User Space

User space offers little isolation between applications. And since different applications share the same system libraries, dependencies can easily become a mess. Software containers, on the other hand, offer you:

- Isolated user space

- Isolated system libraries

- One application per container (if you’re doing it right, but let’s assume that’s the case)

- Containers that don't affect each other

- A host environment that doesn't affect the containers
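A toy model can illustrate the dependency mess that this isolation avoids. In this hypothetical Python sketch, each application declares the library versions it needs; the application names, library names, and versions are made up. Installing everything into one shared user space fails as soon as two applications need different versions of the same library. Giving each application its own set of libraries, as one container per application does, leaves nothing to disagree about.

```python
from collections import defaultdict

# Hypothetical applications and the library versions they depend on.
APPS: dict[str, dict[str, str]] = {
    "web-ui": {"libssl": "1.0", "libc": "2.19"},
    "report-service": {"libssl": "1.1", "libc": "2.19"},
}


def shared_conflicts(apps: dict[str, dict[str, str]]) -> dict[str, set[str]]:
    """Libraries that would need more than one version in a single shared user space."""
    wanted: dict[str, set[str]] = defaultdict(set)
    for deps in apps.values():
        for lib, version in deps.items():
            wanted[lib].add(version)
    return {lib: versions for lib, versions in wanted.items() if len(versions) > 1}


def container_conflicts(apps: dict[str, dict[str, str]]) -> dict[str, dict[str, set[str]]]:
    """One container per application: each library set is checked only against itself."""
    return {
        name: conflicts
        for name, deps in apps.items()
        if (conflicts := shared_conflicts({name: deps}))
    }


if __name__ == "__main__":
    print("shared user space:", shared_conflicts(APPS))
    print("one container per app:", container_conflicts(APPS))
```

The shared case reports `libssl` as a conflict. The per-container case is empty by construction, which is exactly the point of isolating system libraries.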

Virtualization

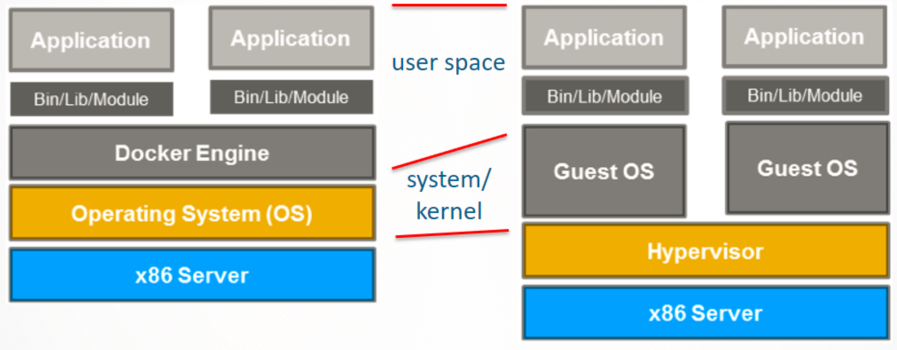

To better understand how that is achieved, let's apply this (new) understanding of how Linux is built up to different virtualization strategies:

Most of us have grown up knowing and using machine virtualization (the right side of the diagram): the likes of VMware, VirtualBox, and Virtual PC. These technologies, great technical feats in their own right, run multiple full operating systems on the same hardware using a thin layer of software called a hypervisor. In that architecture, the full operating system (kernel and system libraries) and the application are duplicated for every virtual machine. That is a lot of duplication, which makes it a heavyweight solution. Contrast that with operating-system-level virtualization, where the operating system kernel is reused and only the system libraries and the application are exclusive to a container. This is a lightweight solution. (The difference is mostly felt because the operating system doesn't have to boot for a container to start. The kernel's size on disk isn't much of a saving in itself, although Docker images are often built to be a lot leaner than their full operating system counterparts.)
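The difference in duplication can be tallied with a small model. This Python sketch is a deliberate simplification. It ignores the hypervisor, any host operating system, and the sharing of image layers between containers. It counts the layers each approach needs to run a given number of isolated applications. Every virtual machine brings its own kernel, system libraries, and application. Containers bring only system libraries and the application, and they share one host kernel.

```python
from collections import Counter


def layers(strategy: str, n_apps: int) -> Counter:
    """Count the software layers needed to run n_apps isolated applications.

    Simplified model: the hypervisor, the host OS, and image layer sharing are ignored.
    """
    shared: list[str] = []
    if strategy == "virtual-machine":
        # Each virtual machine duplicates the full stack.
        per_instance = ["kernel", "system libraries", "application"]
    elif strategy == "container":
        # Containers reuse the host kernel; only libraries and the app are per container.
        per_instance = ["system libraries", "application"]
        shared = ["kernel"]
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    counts = Counter(shared)
    for layer in per_instance:
        counts[layer] += n_apps
    return counts


if __name__ == "__main__":
    for strategy in ("virtual-machine", "container"):
        print(strategy, dict(layers(strategy, n_apps=3)))
```

In this model, the kernel count also tells you how many operating systems have to boot. For three applications, that is three for the virtual machines. For the containers, nothing boots beyond the host kernel, which is already running.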

Containers

Meanwhile, the fact that containers in operating-system-level virtualization have their own system libraries means that each one represents a specific Linux distribution (since the system libraries were what set Linux distributions apart). This is an amazing feature of Docker containers: regardless of the host environment's Linux distribution, you can run any Linux distribution in the container, giving the application a very reliable set of system libraries to depend on. You can easily run a Debian container on a Red Hat or Ubuntu host environment, or the other way around: an Ubuntu container on a Debian host environment, et cetera.

Containers also hold a reliable copy of all the libraries the application requires, and of the application itself, so that the application is ready to run without environment or dependency issues. Containers do not ship their own Linux kernel: that one is shared with the host environment.
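A quick way to check that containers share the host kernel is to compare the host's kernel release with `uname -r` run inside containers of different distributions. The Python sketch below shells out to the Docker CLI as `docker run --rm IMAGE uname -r`, where `--rm` removes the container once it exits. It assumes Docker is installed and that the current user is allowed to run it. The image tags `debian:stable` and `ubuntu:latest` are only examples. The command runner is a parameter, so the comparison logic can be exercised without Docker.

```python
import platform
import subprocess
from typing import Callable, Sequence

# A runner takes a command line and returns its standard output.
Runner = Callable[[Sequence[str]], str]


def run_command(args: Sequence[str]) -> str:
    """Run a command and return its standard output (raises if it fails)."""
    result = subprocess.run(list(args), capture_output=True, text=True, check=True)
    return result.stdout


def container_kernel(image: str, run: Runner = run_command) -> str:
    """Kernel release as seen from inside a throwaway container of `image`."""
    # Assumption: the docker CLI is on PATH and usable by the current user.
    return run(["docker", "run", "--rm", image, "uname", "-r"]).strip()


def compare_kernels(images: Sequence[str], host_kernel: str,
                    run: Runner = run_command) -> dict[str, bool]:
    """Map each image to whether its container reports the given host kernel release."""
    return {image: container_kernel(image, run) == host_kernel for image in images}


if __name__ == "__main__":
    # Example image tags; any Linux images would do.
    print(compare_kernels(["debian:stable", "ubuntu:latest"], platform.release()))
```

On a Linux host, every image should map to `True`. On Docker Desktop for macOS or Windows, the containers run inside a Linux virtual machine, so they report that VM's kernel rather than the host's.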

Conclusion

Docker containers offer isolated applications. While they share the hardware and kernel of the host environment, they exclusively own their system libraries and user space, so you can easily run, e.g., Debian on top of CentOS. You would run one application per container: lightweight, and isolated from other containers and from the host environment.

This post was originally published on Jama's blog on December 21st, 2016.